🤖 Understanding Large Language Models (LLMs)

To begin, let’s delve into the concept of Large Language Models (LLMs). At their core, LLMs are complex algorithms that generate human-like text based on patterns learned from vast amounts of data. They operate in a non-deterministic manner, meaning their responses are not fixed or pre-determined but are instead generated based on probabilities derived from their training data. This allows them to produce varied and contextually relevant responses to a wide range of inputs.

LLMs function by predicting the next word in a sequence of words, using patterns and relationships they’ve identified in the data they’ve been trained on. This training process involves processing massive datasets, such as books, articles, websites, and other text sources, to understand the nuances of human language.

🧠 Specialized vs. General Models

It’s important to note that not all LLMs are created equal. Some models are designed to be generalists, capable of generating text on a wide variety of topics. These models are typically very large and have been trained on diverse datasets, enabling them to handle a wide range of queries. However, their broad training can also mean they lack depth in specialized areas.

On the other hand, there are LLMs specifically fine-tuned for particular domains, such as medicine, law, or technical fields. These specialized models are trained on domain-specific datasets, allowing them to generate more accurate and relevant responses within their area of expertise.

📏 Model Size and Response Time

The size of an LLM—measured in the number of parameters or the amount of training data used—also plays a crucial role in its performance and response time. Larger models, with billions or even trillions of parameters, typically provide more nuanced and context-aware responses due to their extensive training. However, this size comes at a cost: larger models require more computational power and time to generate responses, which can lead to slower response times.

🔍 Limitations of LLMs

Have you ever wondered why ChatGPT doesn’t always give correct answers?

While LLMs have demonstrated remarkable capabilities in natural language understanding and generation, they also have limitations. Because they are trained on static datasets, they do not have access to real-time information or knowledge that has emerged after their training period.

Moreover, their reliance on patterns and probabilities means they might generate plausible-sounding but incorrect or nonsensical responses, particularly when faced with ambiguous or highly specific queries.

I’ve heard of that… or did I just imagine that I heard it?

In addition, LLMs can struggle with tasks that require logical reasoning or precise calculations, as they were not specifically trained for such purposes. For example, a general-purpose LLM may provide creative and contextually appropriate responses to a wide range of queries, but it may falter when asked to solve a complex mathematical equation or analyze a chess position accurately.

By understanding these dynamics, we can better appreciate the strengths and limitations of LLMs and make informed decisions about their use in various applications.

🌟 AI Agents

With the growing capabilities of LLMs, we are witnessing a significant leap towards the realization of sophisticated AI systems, often referred to as AI Agents. These AI Agents leverage the strengths of LLMs and other tools to perform complex tasks autonomously. By integrating various components and techniques, AI Agents aim to replicate, and sometimes exceed, human-like decision-making and problem-solving abilities.

⚠️ The Challenges of AI Agents

AI agents are like digital butlers — always ready to assist, but sometimes they might serve you coffee in a sock instead of a cup.

Despite their impressive capabilities, AI Agents face a range of challenges that must be addressed to ensure their reliability, security, and ethical deployment. These challenges include:

-

Robustness in Unpredictable Environments: AI Agents often operate in dynamic and unpredictable environments where new, unseen scenarios can occur. Ensuring that these agents are robust enough to handle unexpected inputs or situations without failing or producing harmful outcomes is a significant challenge.

-

Security Vulnerabilities: AI Agents are susceptible to various types of cyber attacks, including data breaches and adversarial attacks. One notable threat is prompt injection attacks, where malicious users craft specific inputs designed to manipulate the behavior of the AI Agent.

-

Data Privacy and Compliance: AI Agents often require access to large datasets, which may include sensitive personal information. Protecting this data from unauthorized access and ensuring compliance with privacy regulations (such as GDPR) is a critical challenge.

-

Complexity in Debugging: As AI Agents grow more complex, debugging and maintaining these systems becomes increasingly difficult. Identifying the root cause of a problem in an AI system, especially one that learns and adapts over time, can be a daunting task.

🧩 Core Components of Advanced AI Agents

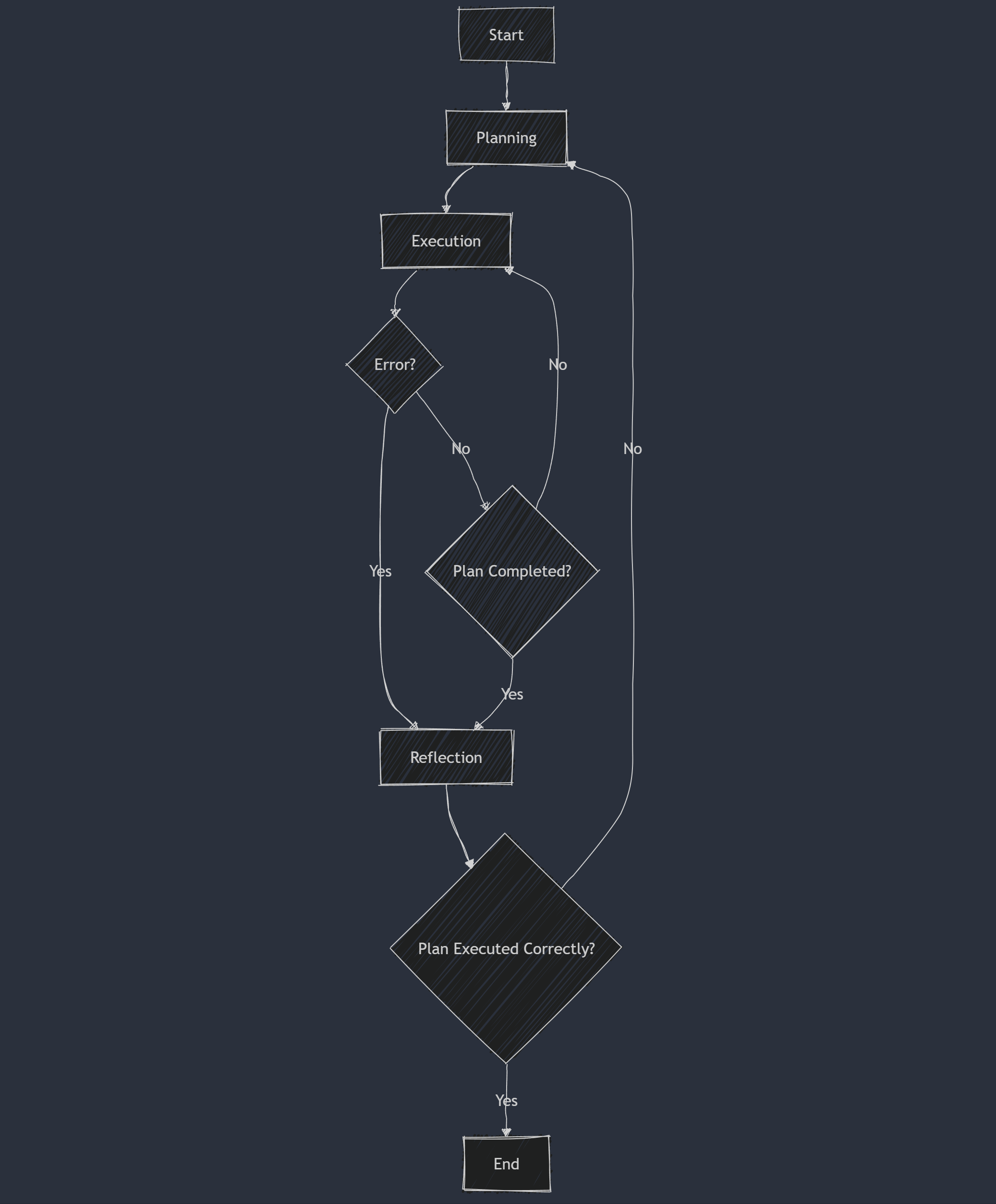

More advanced AI Agents are typically structured around three core components that collaborate seamlessly to achieve their objectives:

-

Planning: This phase involves the development of strategies and the identification of necessary steps to accomplish specific goals. Planning requires the AI Agent to analyze the task at hand, consider various scenarios, and outline a clear path forward. Advanced planning often involves predictive models and simulations to anticipate potential outcomes and adjust strategies accordingly.

-

Execution: Once a plan is in place, the AI Agent moves to the execution phase, where it implements the planned actions and processes the tasks. This phase involves interacting with various systems, executing commands, and monitoring progress. The execution phase can range from automating routine tasks to handling more complex, dynamic interactions.

-

Reflection: After the execution of tasks, the AI Agent enters the reflection phase, where it analyzes the outcomes and evaluates the effectiveness of its actions. This involves assessing performance metrics, gathering feedback, and identifying areas for improvement. Reflection helps the AI Agent learn from its experiences, adapt to new information, and refine its strategies for future tasks.

🎭 Multi-Agent Systems and Collaboration

As AI technology advances, we’re seeing the emergence of multi-agent systems where multiple AI Agents collaborate to solve complex problems. This paradigm opens up new possibilities:

-

Agents with different specializations can work together, combining their strengths to tackle multifaceted challenges.

-

Collaborative problem-solving can lead to more robust and creative solutions.

🚧 The Future Directions

Looking ahead, the future of AI Agents holds exciting possibilities. As technology progresses, we can expect more sophisticated AI Agents with enhanced cognitive abilities, greater autonomy, and improved adaptability. The integration of advanced techniques such as reinforcement learning, neural networks, and advanced natural language processing will further expand their capabilities and applications.

💡 Practical Use of AI Agents

Mindful Day AI demonstrates that creating basic AI agents is not difficult, and their practical applications and capabilities are vast. This simple application utilizes AI agents to provide real-time weather forecasts and generate daily plans based on weather conditions and your calendar. This project highlights how easily we can implement AI to enhance our daily activities.

Want to learn more? Visit the project page: Mindful Day AI