The world of AI is constantly evolving, and new standards are emerging that will shape its future. One such groundbreaking development is the Model Context Protocol (MCP), a new way for Large Language Models to communicate with the outside world, promising to make AI agents more powerful and versatile than ever before.

MCP is a Revolution! But What Exactly Is It?

Communication is vital in computing, from interactions inside a single processor to networks connecting many computers. Over the decades, brilliant minds have developed communication protocols that precisely define how data is structured and transmitted and what responses to expect. These well-conceived standards spare us from reinventing the wheel and let us focus on improving them for better performance and security.

The same applies to Large Language Models (LLMs) and the AI agents built on them. Previously, if an agent needed a tool such as a calculator, I had to build a system that talks to an external service (e.g., Wolfram Alpha) through its API, which is itself a kind of communication contract. I then had to describe to the LLM how to use this system and test the integration thoroughly. The process was often time-consuming and prone to errors or oversights.

This changed when Anthropic introduced the Model Context Protocol (MCP). MCP offers a standardized way to manage interactions between LLMs and external sources of information or capabilities, providing:

- Universal access to data and tools: Simplifying how LLMs connect to diverse external resources.

- Bidirectional, secure communication: Enabling two-way data exchange that is designed with safety in mind.

- Scalability and interoperability: Designing a framework that can grow with needs and work across different systems.

MCP acts as an intermediary between an LLM that needs external context and the source of that context. It standardizes how a request produced by the LLM (which tool to call, and with which arguments) is turned into a call to a specific function exposed by an MCP server, and how the result of that call is returned to the LLM in the format it expects. This streamlines the integration of external functionality, making AI agents more powerful and easier to develop.
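To make this concrete: MCP messages are JSON-RPC 2.0, and a tool invocation is a `tools/call` request that carries the tool's `name` and its `arguments`. The minimal sketch below, using only Python's standard library, shows a server-side handler turning such a request into an ordinary function call and wrapping the return value in a text content block. The `word_count` tool and the `TOOLS` registry are hypothetical, and a real server would normally rely on an official SDK rather than hand-written JSON handling.

```python
import json
from typing import Any, Callable, Dict


def word_count(text: str) -> int:
    """Hypothetical tool: count whitespace-separated words."""
    return len(text.split())


# Hypothetical registry mapping tool names (as the LLM sees them) to Python functions.
TOOLS: Dict[str, Callable[..., Any]] = {"word_count": word_count}


def handle_tools_call(raw_request: str) -> str:
    """Translate a JSON-RPC `tools/call` request into a function call and wrap the result."""
    request = json.loads(raw_request)
    name = request["params"]["name"]
    arguments = request["params"].get("arguments", {})
    tool = TOOLS.get(name)
    if tool is None:
        # -32602 is the JSON-RPC "Invalid params" error code.
        response = {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32602, "message": f"Unknown tool: {name}"}}
    else:
        value = tool(**arguments)  # the LLM-chosen arguments become keyword arguments
        response = {"jsonrpc": "2.0", "id": request["id"],
                    "result": {"content": [{"type": "text", "text": str(value)}],
                               "isError": False}}
    return json.dumps(response)


if __name__ == "__main__":
    request = json.dumps({
        "jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "word_count", "arguments": {"text": "hello MCP world"}},
    })
    print(handle_tools_call(request))
```

Here the response carries the result `"3"` as a text content block, which the client then passes back to the LLM as context.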

Thanks to this structured approach, many MCP servers have appeared that are very easy to integrate, giving LLMs simple access to a wide range of services. A curated list of notable MCP servers can be found here: awesome-mcp-servers. Servers like these significantly simplify building AI agents.

But That’s Not All! Cursor + MCP

The MCP client, the application that connects the LLM to MCP servers, is the other crucial component. A growing number of programs support it, and a list of them can be found here: awesome-mcp-clients. Currently, the most popular client seems to be Claude Desktop, likely because it was initially the only MCP client available.

Personally, I use Cursor every day, and it has a setting for adding MCP servers. I use two:

```json
{
  "mcpServers": {
    "context7": {
      "command": "npx",
      "args": ["-y", "@upstash/context7-mcp@latest"]
    },
    "brave-search": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-brave-search"],
      "env": {
        "BRAVE_API_KEY": "YOUR_API_KEY"
      }
    }
  }
}
```

Context7 is the most wonderful thing I’ve come across recently. It’s a service that provides up-to-date documentation, tailored for analysis by LLMs, along with the ability to search through it. This makes working with code much smoother and noticeably reduces hallucinations, which saves us the time we would otherwise spend searching through documentation.

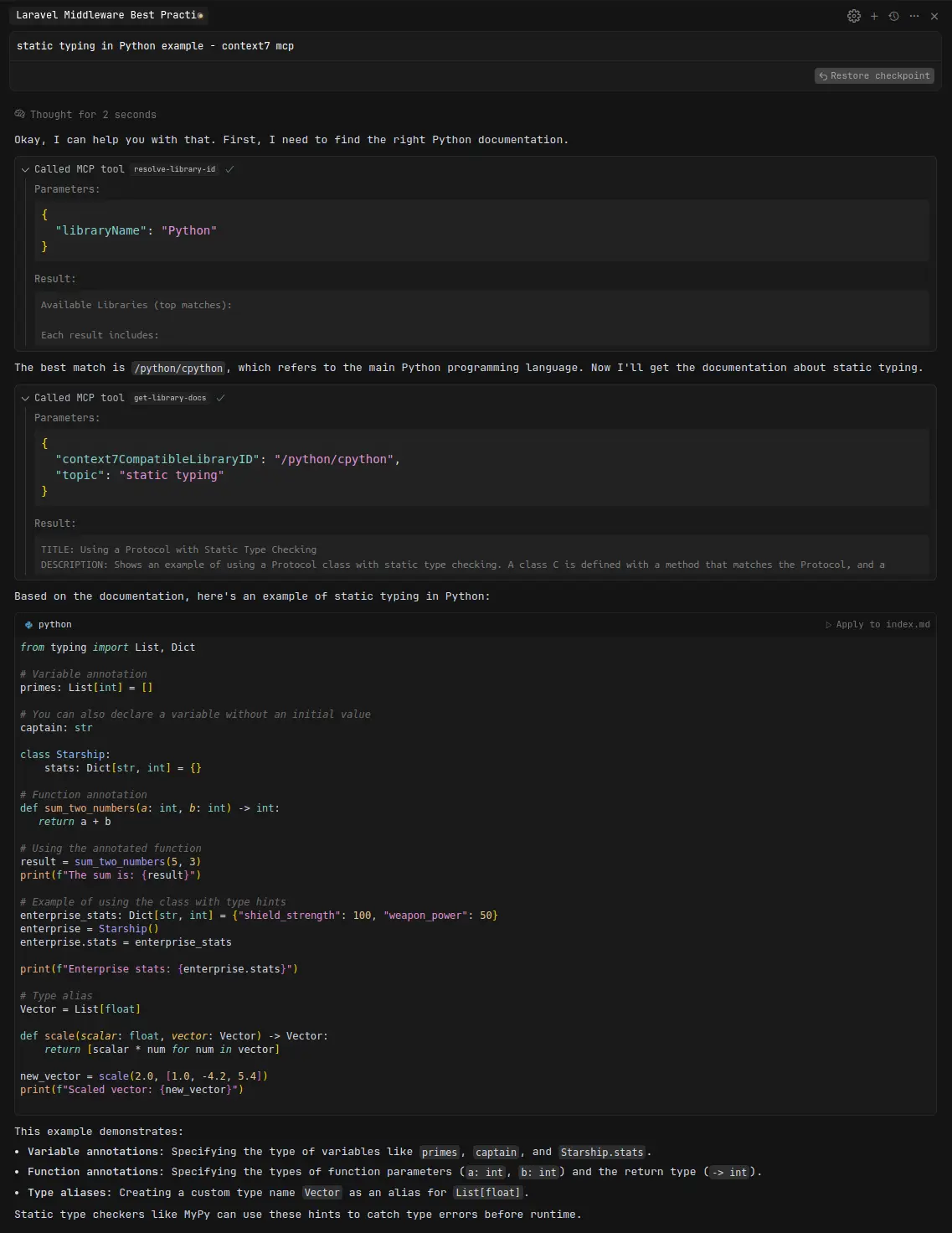
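For a rough idea of what a client does with each `mcpServers` entry, here is a minimal sketch assuming the stdio transport: the client starts `command` with `args` as a child process, adds the `env` variables, and then exchanges JSON-RPC messages over that process's stdin and stdout. This is not Cursor's actual implementation; `launch_specs` and `start_server` are hypothetical names, and merging `env` into the client's own environment is an assumption.

```python
import json
import os
import subprocess
from typing import Dict, List, Tuple

# The mcpServers configuration shown earlier, embedded as a string for the example.
CONFIG = """
{
  "mcpServers": {
    "context7": {"command": "npx", "args": ["-y", "@upstash/context7-mcp@latest"]},
    "brave-search": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-brave-search"],
      "env": {"BRAVE_API_KEY": "YOUR_API_KEY"}
    }
  }
}
"""


def launch_specs(config_text: str) -> Dict[str, Tuple[List[str], Dict[str, str]]]:
    """Hypothetical helper: map each server name to (argv, extra environment variables)."""
    servers = json.loads(config_text)["mcpServers"]
    return {
        name: ([entry["command"], *entry.get("args", [])], entry.get("env", {}))
        for name, entry in servers.items()
    }


def start_server(argv: List[str], extra_env: Dict[str, str]) -> subprocess.Popen:
    """Hypothetical helper: spawn one server process. A client would then write
    JSON-RPC messages to its stdin and read replies from its stdout (not shown)."""
    env = {**os.environ, **extra_env}  # assumption: the server inherits the client's environment
    return subprocess.Popen(argv, stdin=subprocess.PIPE, stdout=subprocess.PIPE,
                            text=True, env=env)


if __name__ == "__main__":
    # Only print what would be launched; starting the servers requires Node.js and npx.
    for name, (argv, env) in launch_specs(CONFIG).items():
        print(name, " ".join(argv), sorted(env))
```

Seen this way, adding a server to the editor boils down to telling the client which program to start and which secrets to pass to it.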

The screenshot below shows MCP in action. First, the model asked the MCP server which documentation was available and received a list of documentation related to our query. Next, it selected the relevant documentation and sent a second query to the server, this time searching it for the specific information our question required. The model then based its answer on the result of this search, and thanks to the additional context from the documentation, the answer was very good.

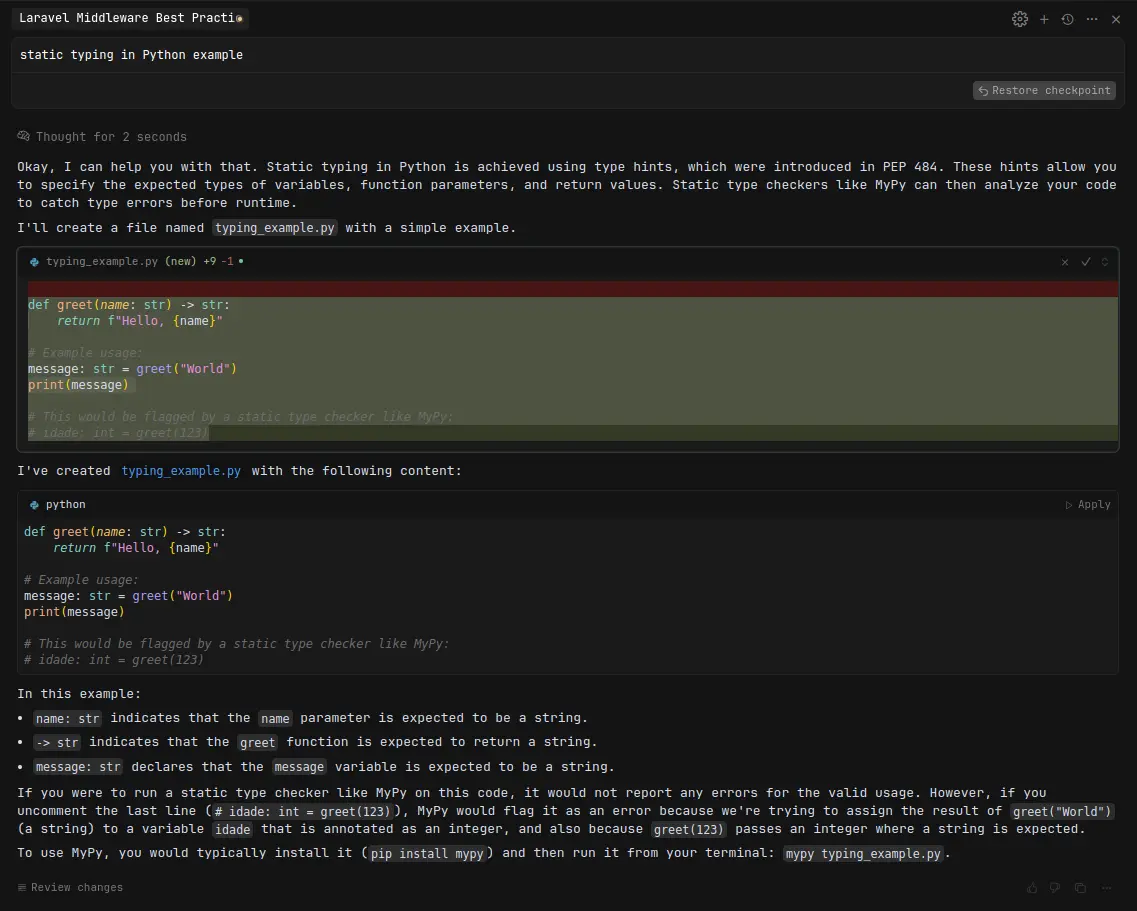
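The two-step exchange described above can be sketched as two consecutive `tools/call` requests, where the output of the first becomes an argument of the second. In Cursor the model itself decides each call; this sketch hard-codes the two steps only to make the data flow visible. The tool names, their argument names, and `fake_send` (a stand-in for a real server connection that returns canned text) are all hypothetical; a real server advertises its actual tools through `tools/list`.

```python
import itertools
from typing import Callable, Dict

# Hypothetical tool names; a real server advertises its own via `tools/list`.
RESOLVE_TOOL = "find_library"
DOCS_TOOL = "query_library_docs"

_next_id = itertools.count(1)


def tools_call(name: str, arguments: Dict[str, str]) -> dict:
    """Build a JSON-RPC 2.0 `tools/call` request with a fresh id."""
    return {"jsonrpc": "2.0", "id": next(_next_id), "method": "tools/call",
            "params": {"name": name, "arguments": arguments}}


def first_text(response: dict) -> str:
    """Return the text of the first content block in a tool result."""
    return response["result"]["content"][0]["text"]


def docs_for(library: str, topic: str, send: Callable[[dict], dict]) -> str:
    # Step 1: ask which documentation matches the library name.
    library_id = first_text(send(tools_call(RESOLVE_TOOL, {"library": library})))
    # Step 2: search that documentation for the topic; the excerpt becomes extra context.
    return first_text(send(tools_call(DOCS_TOOL, {"library_id": library_id, "topic": topic})))


def fake_send(request: dict) -> dict:
    """Hypothetical stand-in for a real server connection, returning canned text."""
    args = request["params"]["arguments"]
    if request["params"]["name"] == RESOLVE_TOOL:
        text = f"/docs/{args['library']}"
    else:
        text = f"excerpt from {args['library_id']} about {args['topic']}"
    return {"jsonrpc": "2.0", "id": request["id"],
            "result": {"content": [{"type": "text", "text": text}]}}


if __name__ == "__main__":
    print(docs_for("somelib", "routing", fake_send))
```

The excerpt returned by the second call is what ends up in the model's context before it writes its answer.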

For comparison, below is how the same model (gemini-2.5-pro-preview-05-06) performed without the additional context. The answer is dramatically worse, which illustrates how crucial additional context is for LLMs.

The second MCP server I use is Brave Search, which lets the model search the internet. I know that Cursor has a built-in web search, but in my opinion the MCP server yields better results.

This demonstrates the immense potential of MCP: in literally five minutes, I added two tools to the editor I use every day, and they significantly improve its results. Best of all, the number of MCP servers keeps growing with no sign of slowing down, so we may be witnessing the birth of a new standard that we will use for years to come.

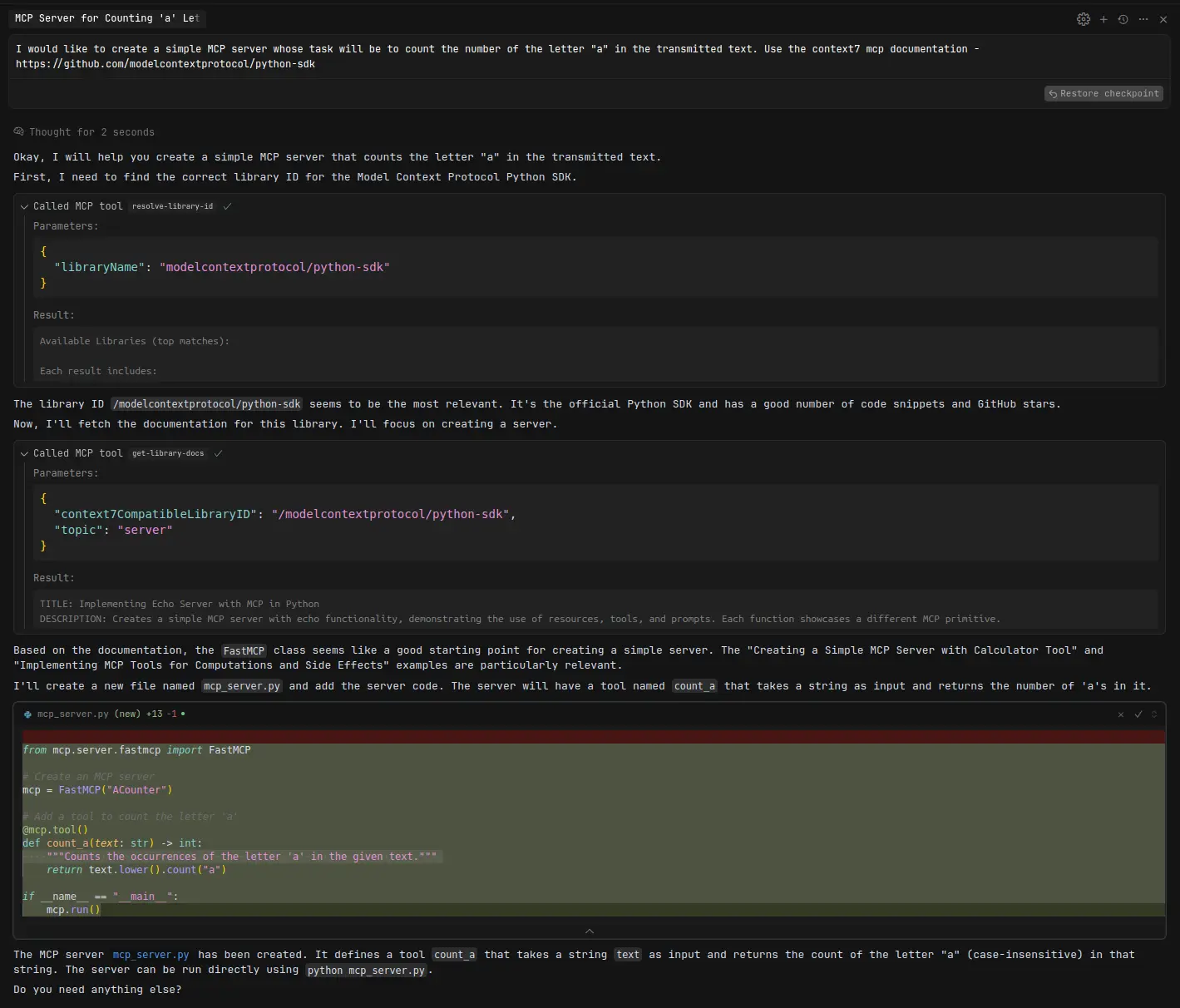

You Can Build Your Own MCP Server

You can create your own server that implements the Model Context Protocol, and you can get help from AI along with up-to-date documentation from Context7.

Yes, it’s that simple! Creating a server is similar to creating API endpoints, which means we can quickly build AI agents that use MCP servers.
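To illustrate the comparison with API endpoints, here is a dependency-free sketch of a tiny stdio server with one calculator tool. Each JSON-RPC method (`initialize`, `tools/list`, `tools/call`) is routed to its own branch, much like routes in a web framework. The `add` tool and the server name are hypothetical, the protocol revision string `2024-11-05` is an assumption, and in practice an official SDK (such as Python's `mcp` package) would handle these details for you.

```python
import json
import sys
from typing import Optional

# One hypothetical tool, described by a JSON Schema so the client (and the LLM) know how to call it.
TOOLS = [{
    "name": "add",
    "description": "Add two numbers.",
    "inputSchema": {
        "type": "object",
        "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
        "required": ["a", "b"],
    },
}]


def error(msg_id, code: int, message: str) -> dict:
    """Build a JSON-RPC error response."""
    return {"jsonrpc": "2.0", "id": msg_id, "error": {"code": code, "message": message}}


def handle(message: dict) -> Optional[dict]:
    """Route one JSON-RPC message to a handler, much like routing an HTTP request to an endpoint."""
    method, msg_id = message.get("method"), message.get("id")
    if msg_id is None:
        return None  # notifications such as notifications/initialized get no reply
    if method == "initialize":
        result = {
            "protocolVersion": "2024-11-05",  # assumed protocol revision
            "capabilities": {"tools": {}},
            "serverInfo": {"name": "demo-calculator", "version": "0.1.0"},  # hypothetical
        }
    elif method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        params = message["params"]
        if params["name"] != "add":
            return error(msg_id, -32602, f"Unknown tool: {params['name']}")
        args = params["arguments"]
        result = {"content": [{"type": "text", "text": str(args["a"] + args["b"])}],
                  "isError": False}
    else:
        return error(msg_id, -32601, f"Method not found: {method}")
    return {"jsonrpc": "2.0", "id": msg_id, "result": result}


def serve() -> None:
    """stdio transport: read one JSON-RPC message per line from stdin, reply on stdout."""
    for line in sys.stdin:
        if not line.strip():
            continue
        reply = handle(json.loads(line))
        if reply is not None:
            sys.stdout.write(json.dumps(reply) + "\n")
            sys.stdout.flush()
```

Appending a call to `serve()` at the bottom of the file (saved, say, as the hypothetical `calculator_server.py`) turns it into a runnable server. It could then presumably be registered in Cursor like the entries above, with `"command": "python"` and `"args": ["calculator_server.py"]`.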